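1. Environment preparation (30mins)

1.1 Prepare the machines

- Recommended operating system: openEuler 20.03

Recommended machine configuration

- Recommended specification: 8 cores, 32 GB RAM, and at least 200 GB of disk

- Number of machines: 7

- Use the first machine as the control machine for installation and deployment, and log in as root.

- Create a /data directory on every machine (a dedicated disk or partition is recommended):

mkdir /data

- The servers prepared for this guide are: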

| IP | Hostname | MAC address | YUM source (online/offline) |
|---|---|---|---|
| 172.16.32.110 | weops-ha1 | 00:50:56:93:71:87 | online |
| 172.16.32.111 | weops-ha2 | 00:50:56:93:94:6d | online |
| 172.16.32.112 | weops-ha3 | 00:50:56:93:98:29 | online |
| 172.16.32.113 | weops-ha4 | 00:50:56:93:f8:71 | online |
| 172.16.32.114 | weops-ha5 | 00:50:56:93:aa:0c | online |
| 172.16.32.115 | weops-ha6 | 00:50:56:93:75:3e | online |
| 172.16.32.116 | weops-ha7 | 00:50:56:93:4f:9a | online |
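Creating /data on seven machines by hand is easy to get wrong, so it can help to keep the host list in one place. The following is a minimal sketch, not part of the official tooling: `HOSTS` and `plan_data_dir` are hypothetical names, and root SSH access to every host is assumed. The function only prints the commands so they can be reviewed before anything runs.

```bash
#!/bin/bash
# Hypothetical inventory: the IP column of the server table.
HOSTS="172.16.32.110 172.16.32.111 172.16.32.112 172.16.32.113 172.16.32.114 172.16.32.115 172.16.32.116"

# Print one ssh command per host instead of executing it,
# so the plan can be inspected first.
plan_data_dir() {
    for host in $HOSTS; do
        printf 'ssh root@%s "mkdir -p /data"\n' "$host"
    done
}

plan_data_dir
```

Once the output looks right, it can be piped to `sh` to run the commands.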

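1.2 Prepare the installation packages (upload to the control machine)

| No. | Package | File name | Upload path | Notes |
|---|---|---|---|---|
| 1 | Platform package | weops-release-4.15LTS-euler2003.tar.gz | /data/ | The actual file name depends on the delivered build |
| 2 | Certificate package | ssl_certificates.tar.gz | /data/ | 10.10.24.129-00:50:56:93:58:a6 (IP-MAC) |
| 3 | WeOps package | 嘉为科技-weops-blackall-20210714105936.tar.gz | /data/ | The actual file name depends on the delivered build |
| 4 | High-availability adaptation package | ha-lts.20250418.tgz | /data/ | The actual file name depends on the delivered build |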
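Before continuing, it may be worth confirming that every package actually reached the upload path. The sketch below is an illustration only: `missing_packages` is a hypothetical helper, and the glob patterns passed to it are assumptions, since the real file names depend on the delivered build.

```bash
#!/bin/bash
# Print every glob pattern that matches no file in the given directory.
# $1 is the directory; the remaining arguments are quoted glob patterns.
missing_packages() {
    dir=$1
    shift
    for pattern in "$@"; do
        # The pattern is left unquoted on purpose so the shell expands it.
        if ! ls "$dir"/$pattern >/dev/null 2>&1; then
            printf '%s\n' "$pattern"
        fi
    done
}
```

For example, `missing_packages /data 'weops-release-*.tar.gz' 'ssl_certificates.tar.gz' 'ha-lts.*.tgz'` prints nothing when all three packages are present.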

1.3 Prepare the base environment (10mins)

Install common repositories and tools

# Run on: every server

sed -i 's/#UseDNS yes/UseDNS no/g' /etc/ssh/sshd_config

sed -i '/Banner/s/^/#/g' /etc/ssh/sshd_config

echo "" > /etc/motd

systemctl restart sshd

systemctl stop firewalld

systemctl disable firewalld

setenforce 0

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

yum -y install createrepo rsync tar wget vim telnet bind-utils net-tools

cat >> /etc/security/limits.conf << EOF

root soft nofile 102400

root hard nofile 102400

EOF

# Disable the motd and login banners; otherwise the topology initialization (initdata topo) fails

rm -f /etc/motd

rm -f /etc/motd.d/*

rm -f /etc/issue

rm -f /etc/issue.net

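rm -f /etc/issue.d/cockpit.issue

Configure passwordless SSH

# Run on: control machine

ssh-keygen # press Enter at every prompt

ssh-copy-id xx.xx.xx.xx # repeat for the IP of every server, including this machine

Extract the resource packages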

# Extract the bundle packages
cd /data

# Adjust the package names to match the files you actually received
tar xf weops-report_*.tgz
tar xf weops-release-6.1.2-*.tar.gz
tar xf weops_deployment_4.10_*.tgz
tar xf ha-lts.*.tgz

# Extract each product package
cd /data/src/; for f in *gz; do tar xvf "$f"; done

# Extract the certificate package
install -d -m 755 /data/src/cert
tar xvf /data/ssl_certificates.tar.gz -C /data/src/cert/
chmod 644 /data/src/cert/*

# Copy the rpm package directory to /opt/
cp -a /data/src/yum /opt

# Back up the install scripts and replace them with the high-availability versions
mv /data/install{,_bak}
rsync -a /data/ha/ /data/install/

# Replace the high-availability template files
cp -vf /data/ha-templates/cmdb/* /data/src/cmdb/support-files/templates/
cp -vf /data/ha-templates/job/* /data/src/job/support-files/templates/

Configure install.config

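Notes:

- gse and redis must be deployed on the same machine.

- When a machine has several private IPs, the first private IP in the /sbin/ifconfig output is used by default.

- When using an NFS server provided by the customer, skip the NFS deployment step and add the mount to /etc/fstab manually.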
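The gse/redis rule is easy to break when editing the module list by hand, so a quick check before deployment can help. The following is a minimal sketch with hypothetical helper names (`hosts_with_module`, `gse_redis_colocated`). It assumes the rule means that the set of gse hosts equals the set of redis hosts, and that each line has the form `IP module,module(role),...`.

```bash
#!/bin/bash
# Print the IPs from an install.config-style file ($1) whose module list
# contains the module named in $2. A "(role)" suffix is ignored, and the
# match is exact, so redis_sentinel does not count as redis.
hosts_with_module() {
    awk -v want="$2" '
        NF >= 2 {
            n = split($2, mods, ",")
            for (i = 1; i <= n; i++) {
                name = mods[i]
                sub(/\(.*/, "", name)   # redis(master) -> redis
                if (name == want) { print $1; break }
            }
        }' "$1"
}

# Succeed only when gse and redis are listed on exactly the same hosts.
gse_redis_colocated() {
    [ "$(hosts_with_module "$1" gse | sort)" = "$(hosts_with_module "$1" redis | sort)" ]
}
```

Running `gse_redis_colocated /data/install/install.config || echo "gse and redis are on different hosts"` after writing the file gives an early warning.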

## Follow the install.config layout below

# Replace the example IP addresses in the first column with the IPs of your own machines, and make sure all IPs can reach each other

cat << EOF >/data/install/install.config

172.16.32.110 paas,gse,redis(master),iam,ssm,license,appo,consul,weopsconsul(init),casbinmesh(init),weopsproxy

172.16.32.111 paas,gse,redis(slave),iam,ssm,license,appo,consul,influxdb(bkmonitorv3),monitorv3(grafana),monitorv3(influxdb-proxy),monitorv3(ingester),monitorv3(monitor),monitorv3(transfer),weopsconsul,casbinmesh

172.16.32.112 influxdb(bkmonitorv3),monitorv3(grafana),monitorv3(influxdb-proxy),monitorv3(ingester),monitorv3(monitor),monitorv3(transfer),appt,es7,kafka(config),zk(config),nfs,weopsproxy

172.16.32.113 cmdb,mongodb,nodeman(nodeman),rabbitmq,usermgr,nginx,redis_sentinel,appt,trino,datart(init),minio,kafkaadapter,vector

172.16.32.114 cmdb,mongodb,nodeman(nodeman),rabbitmq,usermgr,consul,redis_sentinel,mysql(slave),prometheus(master),echarts,vault(init),automate(master),weopsrdp,guacd,minio,kafkaadapter,vector

172.16.32.115 job,es7,kafka(config),zk(config),mysql(master),weopsconsul,casbinmesh,trino,echarts,minio,datart,monstache,age

172.16.32.116 job,es7,kafka(config),zk(config),nginx,mongodb,redis_sentinel,mysql(slave),prometheus(slave),vault,automate,weopsrdp,guacd,minio,echarts,monstache,age

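EOF

Customize the domain, installation directory, and login password

- Customize the domain and installation directory before deployment

$BK_DOMAIN: the root domain to use.

$INSTALL_PATH: the custom installation directory.

# Before running, replace the variables with your actual second-level domain (for example bktencent.com) and installation directory
cd /data/install
./configure -d $BK_DOMAIN -p $INSTALL_PATH

- Customize the admin login password before deployment

Replace BlueKing with your own password.

cat > /data/install/bin/03-userdef/usermgr.env << EOF
BK_PAAS_ADMIN_PASSWORD=BlueKing
EOF

- Customize the NFS host IP before deployment; fill in the IP that applies to your environment

vim /data/install/bin/03-userdef/global.env

BK_NFS_IP=10.10.25.159

- Customize the control machine IP before deployment

vim /data/install/.controller_ip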

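2. Deploy the platform (105mins)

Note: run all of the following installation commands on the control machine, under /data/install/

2.1 Initialize the platform and check the environment (5mins)

# Run on: control machine
# Working directory: /data/install/

# Initialize the environment
cd /data/
tar xf patch_for_euler-*.tar.gz -C /data/install/
cd /data/install/
./patch_for_euler.sh init_for_lib64
./bk_install common

# Validate the environment and the deployment configuration
./health_check/check_bk_controller.sh

# Benchmark the server CPU; a result below 2000 events per second means the platform will run noticeably slower
yum -y install sysbench
sysbench cpu --cpu-max-prime=20000 --threads=16 run | grep 'events per second'

2.2 Platform deployment (80mins)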

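1. Deploy the platform

# Run on: control machine
# Working directory: /data/install/
cd /data/install

## Preparation before deploying paas
pcmd -m nfs 'mkdir -p /data/bkce/public/nfs/open_paas'
./patch_for_euler.sh init_platform
./bkcli sync common
./bkcli install nfs

# Deploy paas: installs the PaaS platform and its dependent services; once this step completes, the PaaS platform can be opened (15mins)
./bk_install paas

# Deploy app_mgr: sets up the SaaS runtime for the production and test environments (12mins)
./bk_install app_mgr

## Preparation before deploying cmdb
./bkcli sync cmdb

# Deploy CMDB: installs the configuration platform and its dependent services (5mins)
./bk_install cmdb

# Deploy job: installs the job platform backend modules and their dependent components (8mins)
./bk_install job

# Deploy bknodeman: installs the node management backend modules, the node management SaaS, and their dependent components (10mins)
chmod 777 /tmp/
./bk_install bknodeman

# Patch bin/install_es.sh so that elasticsearch may lock memory; the inner double quotes must be escaped
sed -i "/启动elasticsearch/a $CTRL_DIR/pcmd.sh -m es7 \"sed -i '/Service/a LimitMEMLOCK=infinity' /usr/lib/systemd/system/elasticsearch.service\"" bin/install_es.sh
sed -i "/pcmd/a $CTRL_DIR/pcmd.sh -m es7 \"systemctl daemon-reload\"" bin/install_es.sh
./bkcli sync common

# Deploy bkmonitorv3: installs the monitoring platform backend modules, the monitoring platform SaaS, and their dependent components (25mins)
./bk_install bkmonitorv3

- Deploy the official bundled SaaS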

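Run the following in order:

# Run on: control machine
# Working directory: /data/install/
cd /data/install

# Permission center (2mins)
./bk_install saas-o bk_iam

# User management (2mins)
./bk_install saas-o bk_user_manage

2.3 Initialize the BlueKing business topology (5mins)

# Run on: control machine
# Working directory: /data/install/
./bkcli initdata topo

Note: if topology initialization reports an error, follow the appendix entry "Topology initialization error" and then run the initialization again.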

2.4 Check service status (3mins)

# Run on: control machine
# Working directory: /data/install/

# Load the BlueKing maintenance commands
source ~/.bashrc
cd /data/install/
./bkcli status all
echo bkiam usermgr paas cmdb gse job consul | xargs -n 1 ./bkcli check

2.5 Check the hosts entries on the WeOps servers (2mins)

# Run on: control machine
# Working directory: /data/install/
cd /data/install
pcmd -m all 'cat /etc/hosts'

# The output must contain the following entries; add any missing host entries manually
172.16.32.113 paas.weops.ha cmdb.weops.ha job.weops.ha jobapi.weops.ha weops.weops.ha
172.16.32.116 paas.weops.ha cmdb.weops.ha job.weops.ha jobapi.weops.ha weops.weops.ha
172.16.32.113 nodeman.weops.ha
172.16.32.114 nodeman.weops.ha

3. Verify access to the PaaS platform

3.1 DNS configuration

If the WeOps domains have not yet been added to the DNS server, add hosts entries to access WeOps directly.

- Windows configuration

Open the following file in a text editor (such as Notepad++):

C:\Windows\System32\drivers\etc\hosts

# Run on: client
# Copy the following entries into the file above, replacing the IPs and domains with values your browser machine can reach, then save.
172.16.32.113 paas.weops.ha cmdb.weops.ha job.weops.ha jobapi.weops.ha weops.weops.ha
172.16.32.116 paas.weops.ha cmdb.weops.ha job.weops.ha jobapi.weops.ha weops.weops.ha
172.16.32.113 nodeman.weops.ha
172.16.32.114 nodeman.weops.ha

Note: 172.16.32.113 and 172.16.32.116 are the machines running the nginx module, and 172.16.32.113 and 172.16.32.114 are the machines running the nodeman module. To look them up:

# Run on: control machine
# Working directory: /data/install/
# Find which machines a module is deployed on:
grep -E "nginx|nodeman" /data/install/install.config

3.2 Get the administrator account and password

# Run on: any machine
# Run the commands below to get the administrator username and password
grep -E "BK_PAAS_ADMIN_USERNAME|BK_PAAS_ADMIN_PASSWORD" /data/install/bin/04-final/usermgr.env
set | grep 'BK_PAAS_ADMIN'

4. Deploy WeOps (90mins)

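4.1 Install the WeOps dependency components (50mins)

All WeOps components require docker on the host. The component layout was defined in install.config above, and the deployment actions are wrapped in bkcli.

- Initialize the WeOps component deployment environment

#!/bin/bash
# Run on the control machine

# Install docker on all BlueKing servers
cd /data/install
./bkcli install docker

# Load the bundled images (the terminating semicolon of -exec must be escaped)
find /data/weops/components -type f -name "*.tgz" -exec sh -c 'docker load < "{}"' \;
find /data/ha/imgs -type f -name "*.tgz" -exec sh -c 'docker load < "{}"' \;
find /data/weops-report -type f -name "*.tgz" -exec sh -c 'docker load < "{}"' \;

# Initialize the weops repo, used to distribute images in an intranet environment
bash init_weops_images.sh

# Initialize the WeOps environment variables
bash bin/generate_weops_generate_envvars.sh

- Deploy the WeOps components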

#!/bin/bash
# Run on the control machine
# Deploy the WeOps components
# Installation and master/replica setup are wrapped in the bkcli scripts; no manual steps are needed

# WeOps core components
./bkcli install echart
./bkcli install vault
./bkcli install weopsrdp
./bkcli install minio
./bkcli install casbinmesh
./bkcli install monstache
./bkcli install age
./bkcli install automate

# Monitoring extension components
./bkcli install weopsconsul
./bkcli install prometheus
./bkcli install weopsproxy
./bkcli install vector

# Report analysis components
./bkcli install trino
./bkcli install datart

# Check the status after installation
echo weopsconsul weopsproxy trino datart minio prometheus vault automate age monstache casbinmesh vector weopsrdp | xargs -n 1 ./bkcli status

Initialize datart

Initialize the datart MySQL data source; run this on any one datart server

#!/bin/bash
source /data/install/utils.fc

# Log in and keep the response headers, which carry the Authorization token
curl -s 'http://localhost:8080/api/v1/users/login' -i -H 'Content-Type: application/json' -d '{"username":"admin","password":"WeOps2023"}' --compressed --insecure > /tmp/datart.login

# Strip the trailing carriage return that HTTP header lines end with
export AUTH=$(grep Authorization /tmp/datart.login | tr -d '\r')

# "config" is a JSON document embedded as a string, so its inner quotes are escaped
cat << EOF > /tmp/payload.json
{
  "config": "{\"dbType\":\"MYSQL\",\"url\":\"jdbc:mysql://${BK_MYSQL_IP}:3306\",\"user\":\"root\",\"password\":\"${BK_MYSQL_ADMIN_PASSWORD}\",\"driverClass\":\"com.mysql.cj.jdbc.Driver\",\"serverAggregate\":false,\"enableSpecialSQL\":false,\"enableSyncSchemas\":true,\"syncInterval\":\"60\",\"properties\":{}}",
  "createBy": "1e7f1b3aac2247008e5e0e9be07da599",
  "createTime": "2023-05-30 17:33:24",
  "id": "e866c2e14c0b4aaf956110a63f7d5ba6",
  "index": null,
  "isFolder": false,
  "name": "mysql",
  "orgId": "b044363c75a34df8b40e26c1a61e5dde",
  "parentId": null,
  "permission": null,
  "schemaUpdateDate": "2023-06-05 10:42:16",
  "status": 1,
  "type": "JDBC",
  "updateBy": "1e7f1b3aac2247008e5e0e9be07da599",
  "updateTime": "2023-05-30 17:42:01",
  "deleteLoading": false
}
EOF

# Update the data source, then remove the temporary files
curl -s 'http://localhost:8080/api/v1/sources/e866c2e14c0b4aaf956110a63f7d5ba6' -X 'PUT' -H "${AUTH}" -H 'Content-Type: application/json' --data-binary @/tmp/payload.json --compressed --insecure && rm -vf /tmp/payload.json /tmp/datart.login

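4.2 Deploy the WeOps SaaS collection (15mins)

Run the install_saas.sh script to install the SaaS for a given app_code.

For example: ./install_saas.sh monitorcenter_saas

The script records every app_code it has installed in the marker file .step_canway_saas. On a later run, any app_code already listed in that file is skipped; to reinstall one, edit the file and remove its app_code.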
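The skip-if-recorded behaviour described above can be pictured with a small sketch. This is not the code of install_saas.sh, which is not shown in this guide; `already_installed` and `install_once` are hypothetical names, and a marker file holding one app_code per line is an assumption.

```bash
#!/bin/bash
# Marker file that records installed app_codes, one per line (assumed format).
MARKER_FILE=${MARKER_FILE:-.step_canway_saas}

# Succeed when the app_code is already listed as a whole line in the marker file.
already_installed() {
    [ -f "$MARKER_FILE" ] && grep -qxF "$1" "$MARKER_FILE"
}

# Run the given install command unless the app_code is already recorded,
# and record the app_code only when the command succeeds.
install_once() {
    app_code=$1
    shift
    if already_installed "$app_code"; then
        echo "skip $app_code"
        return 0
    fi
    "$@" && echo "$app_code" >> "$MARKER_FILE"
}
```

Recording the app_code only on success means a failed installation is retried on the next run, while removing a line from the file forces a reinstall.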

## 安装 saas,注意请按以下顺序正确安装 saas

# patch for ha

cd /data/install

./patch_for_euler.sh ha

# 部署 saas

./install_saas.sh monitorcenter_saas # 部署统一监控中心 (2mins)

./install_saas.sh cw_uac_saas # 部署统一告警中心V2.0 (2mins)

./install_saas.sh bk_itsm # 部署itsm (4mins)

./install_saas.sh bk_sops # 部署标准运维,若有提示,输入y部署即可 (3mins)

./install_saas.sh weops_saas # 部署weops4.0 (2mins)- 部署 kafka-adapter

kakfa adapter 需在部署saas后才能获取到app auth token

#!/bin/bash

# 操作主机:中控机

cd /data/install

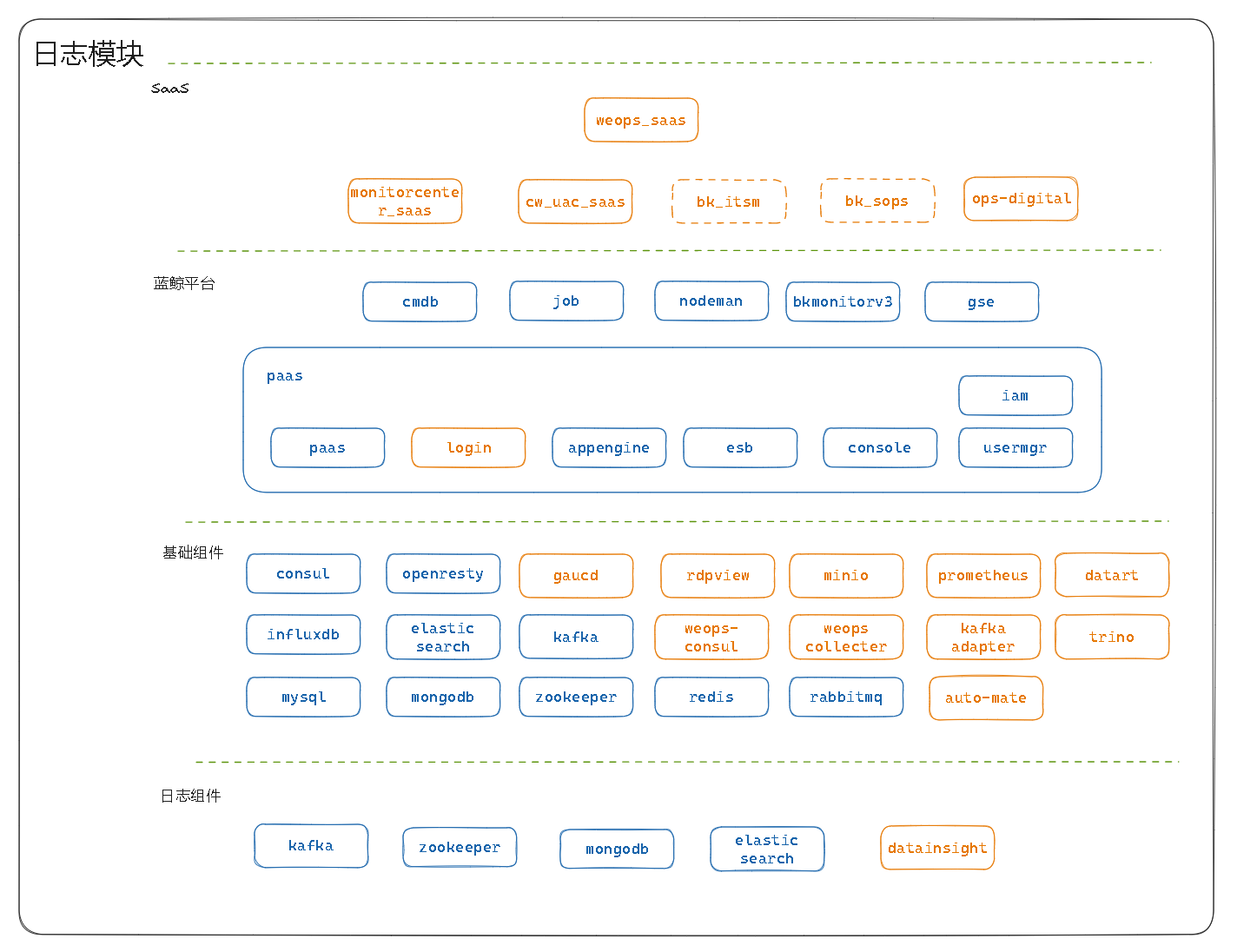

./bkcli install kafkaadapter4.3 部署 DataInSight

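Note: the log module runs on three nodes and depends on docker and docker-compose. It is resource-intensive, so deploying it on three dedicated nodes is recommended.

Resources: 6 cores, 12 GB RAM, and 200 GB of disk per node are recommended; adjust to your actual situation.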
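The per-node files in this section repeat the same template and differ mainly in values derived from the node index, such as the container name suffix, the Kafka node ID, and the list of peer nodes used as seed hosts. As an illustration only, a hypothetical helper `peer_ips` could derive the peer list from the three node IPs:

```bash
#!/bin/bash
# Print the IPs of the other two nodes, comma-separated.
# $1 is the node index (1, 2, or 3); $2..$4 are the three node IPs in order.
peer_ips() {
    case $1 in
        1) printf '%s,%s\n' "$3" "$4" ;;
        2) printf '%s,%s\n' "$2" "$4" ;;
        3) printf '%s,%s\n' "$2" "$3" ;;
        *) echo "node index must be 1, 2 or 3" >&2; return 1 ;;
    esac
}
```

For node 2, `peer_ips 2 "$log_ip_1" "$log_ip_2" "$log_ip_3"` yields the value used for discovery.seed_hosts on that node.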

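Deploy the WeOps log module

Since version 3.13, images for the WeOps peripheral components are hosted in the docker-bkrepo.cwoa.net/ce1b09/weops-docker/ repository, which is reachable from the public internet. If the customer environment is offline, download the images manually. The images required by the WeOps V4.11 log module are:

| Image | Tag | Description |
|---|---|---|
| docker-bkrepo.cwoa.net/ce1b09/weops-docker/mongo | 4.2.0 | Metadata storage |
| docker-bkrepo.cwoa.net/ce1b09/weops-docker/opensearch | v1.1.0 | Log data storage |
| docker-bkrepo.cwoa.net/ce1b09/weops-docker/datainsight | 20240510 | Log service itself |
| docker-bkrepo.cwoa.net/ce1b09/weops-docker/bitnami/kafka | 3.6 | Dependency component |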
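For an offline environment, the images in the table can be pulled on a machine with internet access and bundled into one archive. The sketch below only prints the docker commands for review; `plan_offline_images` and the archive name are hypothetical, while the registry path and tags come from the table.

```bash
#!/bin/bash
REGISTRY=docker-bkrepo.cwoa.net/ce1b09/weops-docker
IMAGES="mongo:4.2.0 opensearch:v1.1.0 datainsight:20240510 bitnami/kafka:3.6"

# Print one pull command per image, then a single save command that
# bundles all of them into the archive named by $1.
plan_offline_images() {
    refs=""
    for image in $IMAGES; do
        printf 'docker pull %s/%s\n' "$REGISTRY" "$image"
        refs="$refs $REGISTRY/$image"
    done
    printf 'docker save -o %s%s\n' "$1" "$refs"
}

plan_offline_images datainsight-images.tar
```

After copying the archive to each log node, `docker load -i datainsight-images.tar` imports the images there.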

Deploy docker

## Run on each of the three log nodes

# Install Docker's dependencies
yum install -y yum-utils device-mapper-persistent-data lvm2

# Add the Docker yum repository
yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

# Install Docker
yum install docker-ce docker-ce-cli containerd.io

# Start Docker
systemctl start docker

Deploy docker-compose

## Run on each of the three log nodes

# Online environment:
curl -o /usr/local/bin/docker-compose https://gh-proxy.canwaybk.cn/https://github.com//docker/compose/releases/download/v2.10.2/docker-compose-linux-x86_64
chmod +x /usr/local/bin/docker-compose

# In an offline environment, download docker-compose manually from this URL:
# https://gh-proxy.canwaybk.cn/https://github.com//docker/compose/releases/download/v2.10.2/docker-compose-linux-x86_64

Run on log node 1

Deploy the log module components

## Assign the IPs below manually, and make sure the three log nodes can all reach each other

# IPs of the three log servers
export log_ip_1=
export log_ip_2=
export log_ip_3=

mkdir -p /data/weops/datainsight/configs

cat << EOF > /data/weops/datainsight/docker-compose.yaml
version: '3.1'
services:
  opensearch1:
    container_name: opensearch1
    image: docker-bkrepo.cwoa.net/ce1b09/weops-docker/opensearch:v1.1.0
    network_mode: host
    volumes:
      - opensearch-data:/usr/share/opensearch/data
    environment:
      - node.name=${log_ip_1}
      - node.master=true
      - cluster.name=datainsight
      - discovery.seed_hosts=${log_ip_2},${log_ip_3}
      - cluster.initial_master_nodes=${log_ip_1},${log_ip_2},${log_ip_3}
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms1024m -Xmx1024m"
      - "plugins.security.disabled=true"
    ulimits:
      memlock:
        soft: -1
        hard: -1
  kafka1:
    image: docker-bkrepo.cwoa.net/ce1b09/weops-docker/bitnami/kafka:3.6
    container_name: kafka1
    network_mode: host
    environment:
      - KAFKA_KRAFT_MODE=true
      - KAFKA_KRAFT_CLUSTER_ID=bI5SSUmBTnemLV8WoOjGfw
      - KAFKA_CFG_CONTROLLER_LISTENER_NAMES=CONTROLLER
      - KAFKA_CFG_PROCESS_ROLES=controller,broker
      - KAFKA_CFG_INTER_BROKER_LISTENER_NAME=PLAINTEXT
      - KAFKA_KRAFT_BROKER_ID=1
      - KAFKA_HEAP_OPTS=-Xmx1024m -Xms1024m
      - KAFKA_MESSAGE_MAX_BYTES=2000000
      - KAFKA_CFG_LISTENERS=PLAINTEXT://:9092,CONTROLLER://:9093
      - KAFKA_CFG_ADVERTISED_LISTENERS=PLAINTEXT://${log_ip_1}:9092
      - KAFKA_CFG_NODE_ID=1
      - KAFKA_CFG_CONTROLLER_QUORUM_VOTERS=1@${log_ip_1}:9093,2@${log_ip_2}:9093,3@${log_ip_3}:9093
      - ALLOW_PLAINTEXT_LISTENER=yes
      - KAFKA_CFG_LISTENER_SECURITY_PROTOCOL_MAP=CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT
    volumes:
      - kafka-data:/kafka
    restart: always
  mongo1:
    container_name: mongo1
    image: docker-bkrepo.cwoa.net/ce1b09/weops-docker/mongo:4.2.0
    restart: always
    command: ['--replSet', 'rs0', '--bind_ip', '${log_ip_1}']
    network_mode: host
    volumes:
      - mongo-data:/data/db
  datainsight1:
    image: docker-bkrepo.cwoa.net/ce1b09/weops-docker/datainsight:20240510
    container_name: datainsight1
    network_mode: host
    command: java -Xmx2048m -Xms2048m -jar /products/graylog.jar server -f /products/graylog.conf
    volumes:
      - ./configs/graylog.conf:/products/graylog.conf:ro
      - datainsight-data:/products/data/journal
volumes:
  opensearch-data:
  kafka-data:
  mongo-data:
  datainsight-data:
EOF

cat << EOF > /data/weops/datainsight/configs/graylog.conf

is_master = true

node_id_file = /tmp/node_id

password_secret = y5ti4vCzfZF1shu9k4VQGgNev9Kw5GXBKmzgREJAZ1KqGOEpBMS5bDOLgBYCgcHhRdE5inudVcqai8FBsUJS90Npm83lAIlc

root_password_sha2 = ca3f47dd6bb65e12a711ebb33a65cf2148b7de5bbbf98049f1410fae14626deb

root_timezone = Asia/Shanghai

bin_dir = bin

data_dir = data

plugin_dir = plugin

http_bind_address = 0.0.0.0:9000

elasticsearch_hosts = http://${log_ip_1}:9200,http://${log_ip_2}:9200,http://${log_ip_3}:9200

rotation_strategy = count

elasticsearch_max_docs_per_index = 20000000

elasticsearch_max_number_of_indices = 20

retention_strategy = delete

elasticsearch_shards = 4

elasticsearch_replicas = 0

elasticsearch_index_prefix = graylog_dev

allow_leading_wildcard_searches = false

allow_highlighting = false

elasticsearch_analyzer = standard

output_batch_size = 500

output_flush_interval = 1

output_fault_count_threshold = 5

output_fault_penalty_seconds = 30

processbuffer_processors = 5

outputbuffer_processors = 3

processor_wait_strategy = blocking

ring_size = 65536

inputbuffer_ring_size = 65536

inputbuffer_processors = 2

inputbuffer_wait_strategy = blocking

message_journal_enabled = true

message_journal_dir = data/journal

lb_recognition_period_seconds = 3

mongodb_uri = mongodb://${log_ip_1}:27017,${log_ip_2}:27017,${log_ip_3}:27017/graylog_dev

mongodb_max_connections = 1000

mongodb_threads_allowed_to_block_multiplier = 5

proxied_requests_thread_pool_size = 32

http_publish_uri = http://${log_ip_1}:9000/

EOF

Start the instances

docker-compose -f /data/weops/datainsight/docker-compose.yaml up -d

Register with consul

## Run on the control machine

# Register datainsight; assign the IP below manually
export log_ip_1=

req=$(cat << EOF

{

"service": {

"id": "datainsight-1",

"name": "datainsight",

"address": "${log_ip_1}",

"port": 9000,

"check": {

"tcp": "${log_ip_1}:9000",

"interval": "10s",

"timeout": "3s"

}

}

}

EOF

)

curl --request PUT --data "$(jq -r .service <<<"$req")" "http://127.0.0.1:8500/v1/agent/service/register"

# Register kafka

req=$(cat << EOF

{

"service": {

"id": "datainsight-kafka-1",

"name": "datainsight-kafka",

"address": "${log_ip_1}",

"port": 9092,

"check": {

"tcp": "${log_ip_1}:9092",

"interval": "10s",

"timeout": "3s"

}

}

}

EOF

)

curl --request PUT --data "$(jq -r .service <<<"$req")" "http://127.0.0.1:8500/v1/agent/service/register"

Run on log node 2

Deploy the log module components

## Assign the IPs below manually, and make sure the three log nodes can all reach each other

# IPs of the three log servers
export log_ip_1=
export log_ip_2=
export log_ip_3=

mkdir -p /data/weops/datainsight/configs

cat << EOF > /data/weops/datainsight/docker-compose.yaml
version: '3.1'
services:
  opensearch2:
    container_name: opensearch2
    image: docker-bkrepo.cwoa.net/ce1b09/weops-docker/opensearch:v1.1.0
    network_mode: host
    volumes:
      - opensearch-data:/usr/share/opensearch/data
    environment:
      - node.name=${log_ip_2}
      - node.master=true
      - cluster.name=datainsight
      - discovery.seed_hosts=${log_ip_1},${log_ip_3}
      - cluster.initial_master_nodes=${log_ip_1},${log_ip_2},${log_ip_3}
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms1024m -Xmx1024m"
      - "plugins.security.disabled=true"
    ulimits:
      memlock:
        soft: -1
        hard: -1
  kafka2:
    image: docker-bkrepo.cwoa.net/ce1b09/weops-docker/bitnami/kafka:3.6
    container_name: kafka2
    network_mode: host
    environment:
      - KAFKA_KRAFT_MODE=true
      - KAFKA_KRAFT_CLUSTER_ID=bI5SSUmBTnemLV8WoOjGfw
      - KAFKA_CFG_CONTROLLER_LISTENER_NAMES=CONTROLLER
      - KAFKA_CFG_PROCESS_ROLES=controller,broker
      - KAFKA_CFG_INTER_BROKER_LISTENER_NAME=PLAINTEXT
      - KAFKA_KRAFT_BROKER_ID=2
      - KAFKA_HEAP_OPTS=-Xmx1024m -Xms1024m
      - KAFKA_MESSAGE_MAX_BYTES=2000000
      - KAFKA_CFG_LISTENERS=PLAINTEXT://:9092,CONTROLLER://:9093
      - KAFKA_CFG_ADVERTISED_LISTENERS=PLAINTEXT://${log_ip_2}:9092
      - KAFKA_CFG_NODE_ID=2
      - KAFKA_CFG_CONTROLLER_QUORUM_VOTERS=1@${log_ip_1}:9093,2@${log_ip_2}:9093,3@${log_ip_3}:9093
      - ALLOW_PLAINTEXT_LISTENER=yes
      - KAFKA_CFG_LISTENER_SECURITY_PROTOCOL_MAP=CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT
    volumes:
      - kafka-data:/kafka
    restart: always
  mongo2:
    container_name: mongo2
    image: docker-bkrepo.cwoa.net/ce1b09/weops-docker/mongo:4.2.0
    restart: always
    command: ['--replSet', 'rs0', '--bind_ip', '${log_ip_2}']
    network_mode: host
    volumes:
      - mongo-data:/data/db
  datainsight2:
    image: docker-bkrepo.cwoa.net/ce1b09/weops-docker/datainsight:20240510
    container_name: datainsight2
    network_mode: host
    command: java -Xmx2048m -Xms2048m -jar /products/graylog.jar server -f /products/graylog.conf
    volumes:
      - ./configs/graylog.conf:/products/graylog.conf:ro
      - datainsight-data:/products/data/journal
volumes:
  kafka-data:
  mongo-data:
  opensearch-data:
  datainsight-data:
EOF

cat << EOF > /data/weops/datainsight/configs/graylog.conf

node_id_file = /tmp/node_id

password_secret = y5ti4vCzfZF1shu9k4VQGgNev9Kw5GXBKmzgREJAZ1KqGOEpBMS5bDOLgBYCgcHhRdE5inudVcqai8FBsUJS90Npm83lAIlc

root_password_sha2 = ca3f47dd6bb65e12a711ebb33a65cf2148b7de5bbbf98049f1410fae14626deb

root_timezone = Asia/Shanghai

bin_dir = bin

data_dir = data

plugin_dir = plugin

http_bind_address = 0.0.0.0:9000

elasticsearch_hosts = http://${log_ip_1}:9200,http://${log_ip_2}:9200,http://${log_ip_3}:9200

rotation_strategy = count

elasticsearch_max_docs_per_index = 20000000

elasticsearch_max_number_of_indices = 20

retention_strategy = delete

elasticsearch_shards = 4

elasticsearch_replicas = 0

elasticsearch_index_prefix = graylog_dev

allow_leading_wildcard_searches = false

allow_highlighting = false

elasticsearch_analyzer = standard

output_batch_size = 500

output_flush_interval = 1

output_fault_count_threshold = 5

output_fault_penalty_seconds = 30

processbuffer_processors = 5

outputbuffer_processors = 3

processor_wait_strategy = blocking

ring_size = 65536

inputbuffer_ring_size = 65536

inputbuffer_processors = 2

inputbuffer_wait_strategy = blocking

message_journal_enabled = true

message_journal_dir = data/journal

lb_recognition_period_seconds = 3

mongodb_uri = mongodb://${log_ip_1}:27017,${log_ip_2}:27017,${log_ip_3}:27017/graylog_dev

mongodb_max_connections = 1000

mongodb_threads_allowed_to_block_multiplier = 5

proxied_requests_thread_pool_size = 32

http_publish_uri = http://${log_ip_2}:9000/

EOF

Start the instances

docker-compose -f /data/weops/datainsight/docker-compose.yaml up -d

Register with consul

## Run on the control machine

# Register datainsight; assign the IP below manually
export log_ip_2=

req=$(cat << EOF

{

"service": {

"id": "datainsight-2",

"name": "datainsight",

"address": "${log_ip_2}",

"port": 9000,

"check": {

"tcp": "${log_ip_2}:9000",

"interval": "10s",

"timeout": "3s"

}

}

}

EOF

)

curl --request PUT --data "$(jq -r .service <<<"$req")" "http://127.0.0.1:8500/v1/agent/service/register"

# Register kafka

req=$(cat << EOF

{

"service": {

"id": "datainsight-kafka-2",

"name": "datainsight-kafka",

"address": "${log_ip_2}",

"port": 9092,

"check": {

"tcp": "${log_ip_2}:9092",

"interval": "10s",

"timeout": "3s"

}

}

}

EOF

)

curl --request PUT --data "$(jq -r .service <<<"$req")" "http://127.0.0.1:8500/v1/agent/service/register"

Run on log node 3

Deploy the log module components

## Assign the IPs below manually, and make sure the three log nodes can all reach each other

# IPs of the three log servers
export log_ip_1=
export log_ip_2=
export log_ip_3=

mkdir -p /data/weops/datainsight/configs

cat << EOF > /data/weops/datainsight/docker-compose.yaml
version: '3.1'
services:
  opensearch3:
    container_name: opensearch3
    image: docker-bkrepo.cwoa.net/ce1b09/weops-docker/opensearch:v1.1.0
    network_mode: host
    volumes:
      - opensearch-data:/usr/share/opensearch/data
    environment:
      - node.name=${log_ip_3}
      - node.master=true
      - cluster.name=datainsight
      - discovery.seed_hosts=${log_ip_1},${log_ip_2}
      - cluster.initial_master_nodes=${log_ip_1},${log_ip_2},${log_ip_3}
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms1024m -Xmx1024m"
      - "plugins.security.disabled=true"
    ulimits:
      memlock:
        soft: -1
        hard: -1
  kafka3:
    image: docker-bkrepo.cwoa.net/ce1b09/weops-docker/bitnami/kafka:3.6
    container_name: kafka3
    network_mode: host
    environment:
      - KAFKA_KRAFT_MODE=true
      - KAFKA_KRAFT_CLUSTER_ID=bI5SSUmBTnemLV8WoOjGfw
      - KAFKA_CFG_CONTROLLER_LISTENER_NAMES=CONTROLLER
      - KAFKA_CFG_PROCESS_ROLES=controller,broker
      - KAFKA_CFG_INTER_BROKER_LISTENER_NAME=PLAINTEXT
      - KAFKA_KRAFT_BROKER_ID=3
      - KAFKA_HEAP_OPTS=-Xmx1024m -Xms1024m
      - KAFKA_MESSAGE_MAX_BYTES=2000000
      - KAFKA_CFG_LISTENERS=PLAINTEXT://:9092,CONTROLLER://:9093
      - KAFKA_CFG_ADVERTISED_LISTENERS=PLAINTEXT://${log_ip_3}:9092
      - KAFKA_CFG_NODE_ID=3
      - KAFKA_CFG_CONTROLLER_QUORUM_VOTERS=1@${log_ip_1}:9093,2@${log_ip_2}:9093,3@${log_ip_3}:9093
      - ALLOW_PLAINTEXT_LISTENER=yes
      - KAFKA_CFG_LISTENER_SECURITY_PROTOCOL_MAP=CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT
    volumes:
      - kafka-data:/kafka
    restart: always
  mongo3:
    container_name: mongo3
    image: docker-bkrepo.cwoa.net/ce1b09/weops-docker/mongo:4.2.0
    restart: always
    command: ['--replSet', 'rs0', '--bind_ip', '${log_ip_3}']
    network_mode: host
    volumes:
      - mongo-data:/data/db
  datainsight3:
    image: docker-bkrepo.cwoa.net/ce1b09/weops-docker/datainsight:20240510
    container_name: datainsight3
    network_mode: host
    command: java -Xmx2048m -Xms2048m -jar /products/graylog.jar server -f /products/graylog.conf
    volumes:
      - ./configs/graylog.conf:/products/graylog.conf:ro
      - datainsight-data:/products/data/journal
volumes:
  kafka-data:
  mongo-data:
  opensearch-data:
  datainsight-data:
EOF

cat << EOF > /data/weops/datainsight/configs/graylog.conf

node_id_file = /tmp/node_id

password_secret = y5ti4vCzfZF1shu9k4VQGgNev9Kw5GXBKmzgREJAZ1KqGOEpBMS5bDOLgBYCgcHhRdE5inudVcqai8FBsUJS90Npm83lAIlc

root_password_sha2 = ca3f47dd6bb65e12a711ebb33a65cf2148b7de5bbbf98049f1410fae14626deb

root_timezone = Asia/Shanghai

bin_dir = bin

data_dir = data

plugin_dir = plugin

http_bind_address = 0.0.0.0:9000

elasticsearch_hosts = http://${log_ip_1}:9200,http://${log_ip_2}:9200,http://${log_ip_3}:9200

rotation_strategy = count

elasticsearch_max_docs_per_index = 20000000

elasticsearch_max_number_of_indices = 20

retention_strategy = delete

elasticsearch_shards = 4

elasticsearch_replicas = 0

elasticsearch_index_prefix = graylog_dev

allow_leading_wildcard_searches = false

allow_highlighting = false

elasticsearch_analyzer = standard

output_batch_size = 500

output_flush_interval = 1

output_fault_count_threshold = 5

output_fault_penalty_seconds = 30

processbuffer_processors = 5

outputbuffer_processors = 3

processor_wait_strategy = blocking

ring_size = 65536

inputbuffer_ring_size = 65536

inputbuffer_processors = 2

inputbuffer_wait_strategy = blocking

message_journal_enabled = true

message_journal_dir = data/journal

lb_recognition_period_seconds = 3

mongodb_uri = mongodb://${log_ip_1}:27017,${log_ip_2}:27017,${log_ip_3}:27017/graylog_dev

mongodb_max_connections = 1000

mongodb_threads_allowed_to_block_multiplier = 5

proxied_requests_thread_pool_size = 32

http_publish_uri = http://${log_ip_3}:9000/

EOF

Start the instances

docker-compose -f /data/weops/datainsight/docker-compose.yaml up -d

Register with consul

## Run on the control machine

# Register datainsight; assign the IP below manually
export log_ip_3=

req=$(cat << EOF

{

"service": {

"id": "datainsight-3",

"name": "datainsight",

"address": "${log_ip_3}",

"port": 9000,

"check": {

"tcp": "${log_ip_3}:9000",

"interval": "10s",

"timeout": "3s"

}

}

}

EOF

)

curl --request PUT --data "$(jq -r .service <<<"$req")" "http://127.0.0.1:8500/v1/agent/service/register"

# Register kafka

req=$(cat << EOF

{

"service": {

"id": "datainsight-kafka-3",

"name": "datainsight-kafka",

"address": "${log_ip_3}",

"port": 9092,

"check": {

"tcp": "${log_ip_3}:9092",

"interval": "10s",

"timeout": "3s"

}

}

}

EOF

)

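curl --request PUT --data "$(jq -r .service <<<"$req")" "http://127.0.0.1:8500/v1/agent/service/register"

Configure the mongodb cluster

## Cluster configuration

# Run on log node 1 (the container on this node is named mongo1)
docker exec -it mongo1 bash

# mongod is bound only to the node IP, so connect to that address; replace {log_ip_1} with the real IP
mongo --host {log_ip_1}

// Replace {log_ip_2} and {log_ip_3} with the real IPs
rs.initiate()
rs.add({host:"{log_ip_2}:27017","priority":1, "votes":1})
rs.add({host:"{log_ip_3}:27017","priority":1, "votes":1})
rs.status().members // all three mongo IPs should be listed

# After the replica set is created, check whether datainsight logs any database connection errors:
docker logs --tail=50 -f datainsight1

WeOps initial configuration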

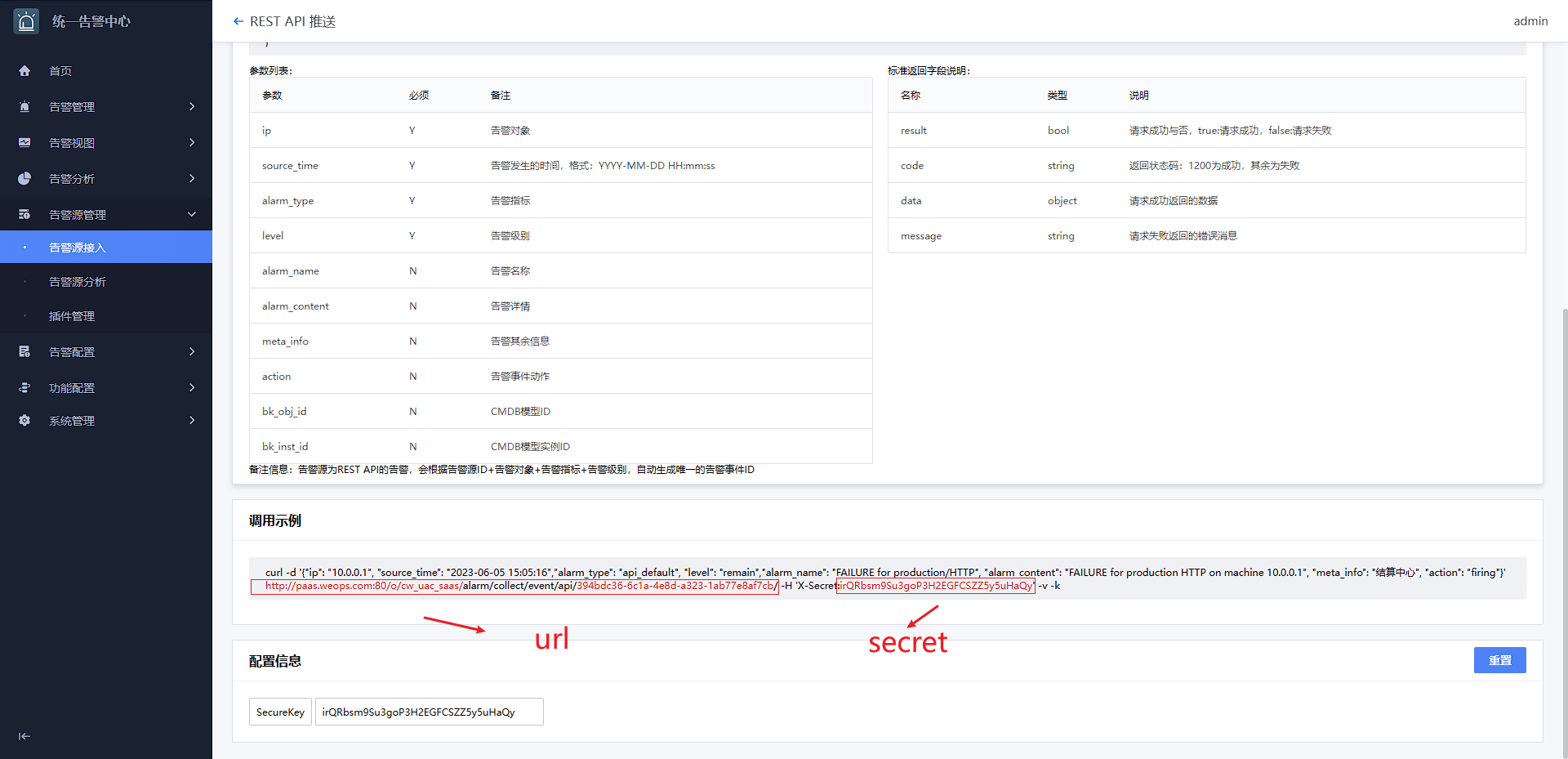

告警中心开启 restful api 告警源,获取 restful api 告警源 url 和 key

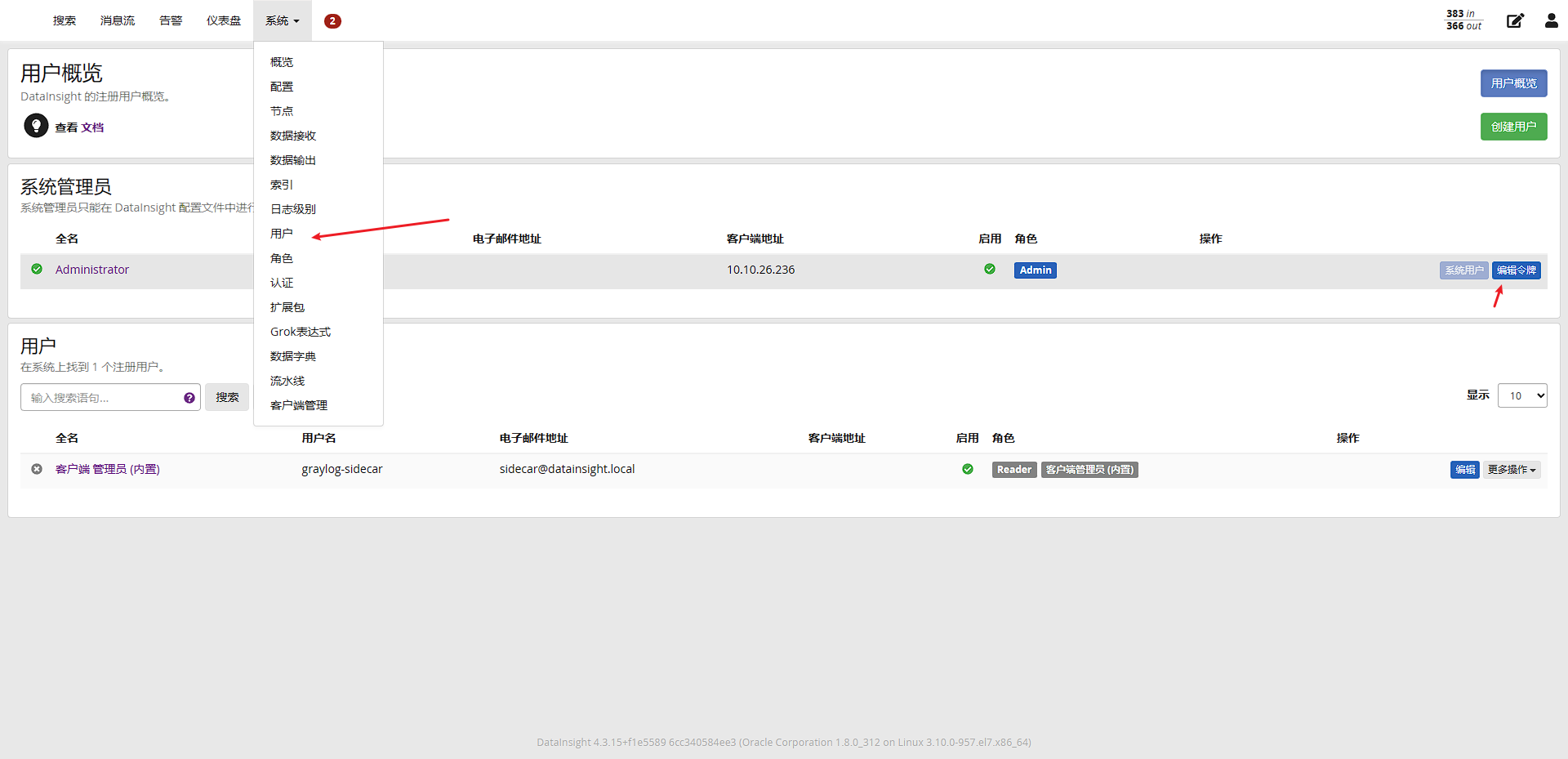

生成 datainsight 的 api token,先将 datainsight 的可视化页面代理出来

## 操作主机:nginx

cd /usr/local/openresty/nginx/conf/conf.d

touch datainsight.conf

vi datainsight.conf

# datainsight.conf 配置参考(注意替换实际端口、域名及IP地址):

server {

listen 80;

#listen 443 ssl;

server_name datainsight.weops.com;

location / {

proxy_pass http://xx.xx.xx.xx:9000;

}

}

# 保存配置后,测试并重载 nginx 使之生效

/usr/local/openresty/nginx/sbin/nginx -t

/usr/local/openresty/nginx/sbin/nginx -s reload

# 浏览器访问,注意配置 DNS 或添加 hosts 记录

# 默认用户和密码为 admin:datainsight-x

http://datainsight.weops.com/

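Add the WeOps SaaS environment variables

| SaaS ID | Variable name (the BKAPP prefix is added by default) | Value (values starting with $ are platform variables and must be obtained manually) |
|---|---|---|
| weops_saas | GRAYLOG_URL | http://datainsight.service.consul:9000 |
| weops_saas | GRAYLOG_AUTH | base64 encoding of admin:datainsight-x: YWRtaW46ZGF0YWluc2lnaHQteA== |
| weops_saas | ALARM_SEND_REST_API | Enable the restful alert source in the alert center as described above, then copy the url from the curl snippet |
| weops_saas | ALARM_SEND_SECRET | Enable the restful alert source in the alert center as described above, then copy the secret from the curl snippet |
| weops_saas | GRAYLOG_API_TOKEN | Generate it in the datainsight web UI described above |
| weops_saas | LOG_OUTPUT_HOST | datainsight-kafka.service.consul:9092 |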
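If the datainsight password is changed from its default, the GRAYLOG_AUTH value has to be regenerated. A minimal sketch, assuming the coreutils `base64` command is available; `basic_auth_value` is a hypothetical helper name:

```bash
#!/bin/bash
# Encode "user:password" as base64 for the GRAYLOG_AUTH variable.
# printf '%s' avoids the trailing newline that echo would add to the input.
basic_auth_value() {
    printf '%s' "$1:$2" | base64
}

basic_auth_value admin datainsight-x
```

With the default credentials this prints the same value as the GRAYLOG_AUTH row in the table.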

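4.4 Deploy APM

To be added

4.3 WeOps basic settings (3mins)

The weops_install.sh script completes some settings automatically (such as automatic log cleanup)

# Run on: control machine
# Working directory: /data/weops

# Configure the WeOps basic settings
source /data/install/utils.fc # if this reports that the file or directory does not exist, log in again over ssh
cd /data/weops/install
bash weops_install.sh settings

# Initialize the itsm es index configuration; open this URL in a browser
{itsm_url}/api/api_quote_count/init_api_quote_count/

4.4 Rebuild the bk_device model group and initialize the CMDB models (8mins)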

# Run on: control machine
# Working directory: /data/weops/init_plugin_service

# Edit config.py and replace the commented parts of CONFIG_LIST, CMDB_URL, and ESB_CONFIG
CONFIG_LIST = [
    "admin", # BlueKing user
    "WeOps2023", # BlueKing user password
    "http://paas.weops.net/", # paas address
]
CMDB_URL = "http://cmdb.weops.net" # cmdb address
ESB_CONFIG = {
    "bk_app_code": "weops_saas",
    "bk_app_secret": "7cd2e5c6-569e-4ca4-9ad8-13f0bee4d25c", # weops_saas application token
    "bk_username": "admin"
}

# After saving the changes, run ini_devgroup.py to rebuild the bk_device model group
python ini_devgroup.py

# Run on: client browser, after logging in to the platform; run migrate to initialize the models and metric groups
# Example: http://paas.weops.net/o/weops_saas/resource/objects/migrate
{domain}/o/weops_saas/resource/objects/migrate
{domain}/o/weops_saas/monitor_mgmt/init_metric_group/

4.5 Initialize monitoring plugins, monitoring group objects, and alert group objects (10mins)

# Run on: control machine

# Disable the monitoring platform's plugin debugging requirement
mysql --login-path=mysql-default << "EOF"
update bkmonitorv3_alert.global_setting set `value`="true" where `key`="SKIP_PLUGIN_DEBUG";
EOF

# Working directory: /data/weops/init_plugin_service
cd /data/weops/init_plugin_service

# Initialize the plugins, monitoring group objects, and alert group objects
python init_plugin.py

4.6 Initialize dashboards (1mins)

Several dashboard templates are built in and can be enabled by calling the endpoint below

# Run on: client browser, directly after logging in to the platform
# Example: http://paas.contoso.com/o/weops_saas/monitor_mgmt/init_dashboard/
{domain}/o/weops_saas/monitor_mgmt/init_dashboard/

4.7 Refresh permissions and initialize templates

## Run on any APPO server

## Initialize permissions
docker exec -it $(docker ps | grep weops_saas | awk '{print $1}') bash
cd /data/app/code
export BK_FILE_PATH=/data/app/code/conf/saas_priv.txt
python manage.py reload_casbin_policy --delete
python manage.py init_role --update

## Initialize templates
python manage.py init_snmp_template

5. Backend initialization (10mins)

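Same as the standard three-node version

6. Appendix

6.1 Components that require manual failover

redis

Some existing BlueKing services do not support redis sentinel, including usermgr, automate, weops, and the monitoring center.

In a default deployment there are two instances with the service name redis@default, but only the redis instance on $BK_REDIS_IP0 is registered for use.

If $BK_REDIS_IP0 goes down and becomes unavailable, switch over manually:

#!/bin/bash
# Run on $BK_REDIS_IP1
source /etc/blueking/env/local.env
systemctl start redis@default
/data/install/bin/reg_consul_svc -D -n redis -p 6379 -a $LAN_IP | tee /etc/consul.d/service/redis.json
consul reload

# Check that redis.service.consul now resolves to $BK_REDIS_IP1
dig +short redis.service.consul

mysql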

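mysql uses a one-master, two-replica architecture by default, and mysql-default.service.consul resolves to the master by default. When the master goes down, switch over manually:

bash mysql_master-slave_switching-consul.sh

For more information, see https://km.cwoa.net/doc/lib/7e9df556d9c04562b6ce443acb58f075/wiki/list/c256a8a55f5c4c57864484e798a4a988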

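age

age uses the same deployment architecture as redis: only one node is enabled at deployment. If $BK_AGE_IP0 goes down, switch over manually:

#!/bin/bash
# Run on $BK_AGE_IP1
source /etc/blueking/env/local.env
docker start age

# Write the age service definition to its own file so the redis definition is not overwritten
/data/install/bin/reg_consul_svc -D -n age -p 5432 -a $LAN_IP | tee /etc/consul.d/service/age.json
consul reload

# Check that age.service.consul now resolves to $BK_AGE_IP1
dig +short age.service.consul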
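The final check in both failover procedures compares the resolver output with the standby IP by eye. As a sketch only, a hypothetical helper `resolves_to` can make that comparison explicit; it takes the resolver output as a string, so it does not depend on how the lookup itself is performed.

```bash
#!/bin/bash
# Succeed when the expected IP ($2) appears as a whole line in the
# resolver output ($1). Matching whole lines keeps 10.0.0.2 from
# matching 10.0.0.21.
resolves_to() {
    printf '%s\n' "$1" | grep -qxF "$2"
}

# Example (assumes dig is installed and the consul DNS is reachable):
#   resolves_to "$(dig +short age.service.consul)" "$BK_AGE_IP1" && echo "age now points at the standby"
```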